I downloaded the SolrNet source code and built it so that I could compare indexing performance between the SolrNet and SolrJ clients. Next, I created a simple console application and referenced the SolrNet library. I then wrote a method that was basically a copy of the Java code I had used with SolrJ: it reads in a CSV file and indexes batches of "documents". The SolrJ version used POJOs (Plain Old Java Objects) with annotations specifying which Solr fields the properties map to. The SolrNet version used POCOs (Plain Old CLR Objects) with attributes specifying which Solr fields the properties map to.

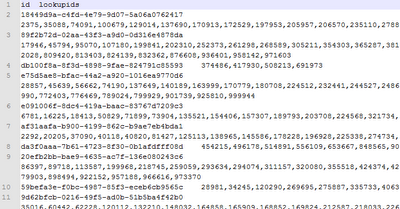

Here is an example of the POCO I used:

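    using System.Collections.Generic;
    using SolrNet.Attributes;

    public class TestRecord
    {
        // Maps to the unique key field "id" in the Solr schema.
        [SolrUniqueKey("id")]
        public string ID { get; set; }

        // Maps to the multi-valued field "lookupids".
        [SolrField("lookupids")]
        public List<int> LookupIDs { get; set; }
    }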

Here is an example of the code that indexed the values of the test records in batches:

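    // SolrNet must be initialized once, before this method is called,
    // with the following line of code:
    // Startup.Init<TestRecord>("http://localhost:8983/solr");
    public static void AddValues(List<TestRecord> testRecords)
    {
        // Resolve the operations instance that Startup.Init registered.
        var solr = ServiceLocator.Current.GetInstance<ISolrOperations<TestRecord>>();

        // Send this batch of records to Solr, then commit it.
        solr.AddRange(testRecords);
        solr.Commit();
    }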
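The method above indexes whatever list it is given, so the batching itself has to happen in the caller. Here is a minimal sketch of one way to split the records into batches. The `BatchIndexer` class and its methods are hypothetical names of my own, not part of SolrNet, and the indexing step is passed in as a delegate so the helper has no dependency on the Solr client:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper (not part of SolrNet) for splitting records into batches.
public static class BatchIndexer
{
    // Splits items into consecutive batches of at most batchSize elements.
    public static IEnumerable<List<T>> InBatches<T>(IEnumerable<T> items, int batchSize)
    {
        if (items == null) throw new ArgumentNullException(nameof(items));
        if (batchSize <= 0) throw new ArgumentOutOfRangeException(nameof(batchSize));
        return Iterate(items, batchSize);
    }

    private static IEnumerable<List<T>> Iterate<T>(IEnumerable<T> items, int batchSize)
    {
        var batch = new List<T>(batchSize);
        foreach (var item in items)
        {
            batch.Add(item);
            if (batch.Count == batchSize)
            {
                yield return batch;
                batch = new List<T>(batchSize);
            }
        }

        // Emit the final, possibly smaller, batch.
        if (batch.Count > 0) yield return batch;
    }

    // Calls indexBatch once per batch and returns the number of batches processed.
    public static int IndexInBatches<T>(IEnumerable<T> items, int batchSize, Action<List<T>> indexBatch)
    {
        if (indexBatch == null) throw new ArgumentNullException(nameof(indexBatch));

        int batchCount = 0;
        foreach (var batch in InBatches(items, batchSize))
        {
            indexBatch(batch);
            batchCount++;
        }
        return batchCount;
    }
}
```

Assuming `allRecords` holds every record read from the CSV file, a call such as `BatchIndexer.IndexInBatches(allRecords, 1000, AddValues);` would pass batches of 1000 records to the `AddValues` method, which commits after each batch.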

It seems to index the data about as fast as the SolrJ code, which isn't terribly surprising. It appeared to be slightly slower, but I will need to run multiple tests with varying batch sizes to see how the results compare between SolrNet and SolrJ.

It took ~2.5 minutes to index 100,000 documents in batches of 100 test records, ~50 seconds in batches of 1,000 test records, and ~45 seconds in batches of 10,000 test records.
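To make those timings easier to compare, they can be converted into documents per second. The sketch below does that arithmetic and includes a small `Stopwatch` wrapper for timing further runs. The `Throughput` class and its method names are hypothetical, and the inputs are only the approximate figures quoted above, with ~2.5 minutes taken as 150 seconds:

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper for turning timings into a throughput figure.
public static class Throughput
{
    // Documents indexed per second for a run of the given duration.
    public static double DocsPerSecond(int documents, double seconds)
    {
        if (seconds <= 0) throw new ArgumentOutOfRangeException(nameof(seconds));
        return documents / seconds;
    }

    // Runs the action once and returns how long it took.
    public static TimeSpan Measure(Action action)
    {
        if (action == null) throw new ArgumentNullException(nameof(action));
        var stopwatch = Stopwatch.StartNew();
        action();
        stopwatch.Stop();
        return stopwatch.Elapsed;
    }
}
```

With the approximate numbers above, `Throughput.DocsPerSecond(100000, 150)` gives roughly 670 documents per second for batches of 100, `Throughput.DocsPerSecond(100000, 50)` gives 2,000 for batches of 1,000, and `Throughput.DocsPerSecond(100000, 45)` gives roughly 2,200 for batches of 10,000. Most of the gain comes from moving from 100 to 1,000 records per batch.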

SolrNet makes it very easy to write code that queries, or indexes into, a Solr index. I was very pleased!