Do you have an interest in Raspberry Pis and cluster computing? Me too!

Parts

One thing that I have enjoyed about building the Raspberry Pi cluster is that it's inexpensive to build up over time.

I bought the Raspberry Pis and the PoE hats from Canakit, and everything else was bought from Amazon. Amazon had higher prices for the Raspberry Pi PoE hat, and didn't have a Raspberry Pi 4B 8GB board available when I first started buying the parts.

Here is a list of what I bought for the cluster.

| Part | My Choice | Qty | Links |
| --- | --- | --- | --- |
| Case | Cloudlet Cluster Case | 1 | Amazon |
| Raspberry Pis | RPi 4B 8GB | 5 | Canakit, Amazon |
| PoE Hats | Raspberry Pi PoE HAT | 5 | Canakit, Amazon |
| SD Cards | SanDisk 128GB microSDXC | 5 | Amazon |
| Network Switch | TP-Link 8 Port PoE Switch | 1 | Amazon |
| Network Cables | Cat7 1FT Multi-Color | 1 x 5pk | Amazon |

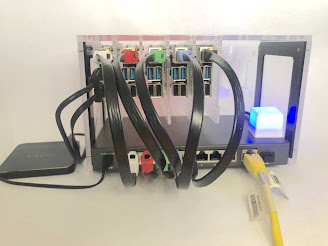

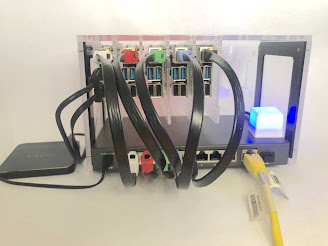

Case

I used the Cloudlet Cluster Case by C4Labs.

Very tiny, but fulfilling, RPi cluster.

It practically hums like the WOPR!

I found the case by searching on Amazon for "Raspberry Pi Cluster Case", and the Cloudlet Cluster Case was the first result I saw that I really liked. I like the look of the stackable cases, but the Cloudlet Cluster Case reminded me of a very tiny computer rack - it felt right.

The case has mounting boards and hardware for 8 Raspberry Pis. You mount the Raspberry Pi onto the acrylic board, and then the mounting board easily snaps into the case.

This is great for allowing you to start very small and expand as you would like. The price might seem like a considerable jump from the stackable case options, but I still picked the Cloudlet Cluster Case because I think it looks nice, it's very sturdy, and it has enough room for the network switch.

You can also see a blue square in the image above - that's the Blinkstick Square with an enclosure. I plan to set up monitoring for the cluster, and for a variety of webhooks, and use the Blinkstick Square for showing status. I figured I would use white, red, green, blue, and purple to indicate which Pi/Node the status was for.
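As a rough idea of how the color-per-node plan could look, here is a minimal sketch. The node names are hypothetical (I haven't named anything here), and `show_node` assumes the third-party `blinkstick` Python package and its `find_first()` / `set_color()` calls; treat it as a starting point rather than the finished monitoring setup.

```python
# Hypothetical node names mapped to the five colors mentioned above.
NODE_COLORS = {
    "node1": "white",
    "node2": "red",
    "node3": "green",
    "node4": "blue",
    "node5": "purple",
}


def color_for_node(node: str) -> str:
    """Return the color assigned to a node, or raise KeyError if unknown."""
    return NODE_COLORS[node]


def show_node(node: str) -> None:
    """Light the BlinkStick in the color assigned to the given node."""
    # Imported here so the mapping above can be used without the hardware library.
    from blinkstick import blinkstick  # assumption: `pip install blinkstick`

    stick = blinkstick.find_first()
    if stick is None:
        raise RuntimeError("No BlinkStick device found")
    # Sets the first LED; the color is given as a CSS color name.
    stick.set_color(name=color_for_node(node))
```

Calling `show_node("node3")` would turn the first LED green, assuming a BlinkStick is plugged in.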

Raspberry Pis

Originally I was going to build a cluster from a couple of old Raspberry Pis that I hadn't been using, but I bought a Raspberry Pi 4 bundle for my daughter and the performance is so good that I decided to get the newer Pis instead.

I would have liked to have bought the Raspberry Pis from Amazon, because I appreciate the customer service you get from Amazon. However, I have had great luck with smaller businesses that sell Raspberry Pi products, and usually they have better prices than what you would get from Amazon. Canakit had Raspberry Pi 4B 8GB boards available before Amazon, and the price is about $15 cheaper. Vilros and the PiShop also had Raspberry Pi 4B 8GB boards listed, but had the same price as Canakit.

PoE Hats

I went with the official Raspberry Pi PoE HAT. The price was better than most options, and I assumed there would be more testing around the official option. Also, one PoE hat that I looked at seemed to have a nicer profile, but the seller suggested buying a fan for it. The official Raspberry Pi PoE hats come with fans attached, and there are no issues with clearance in the Cloudlet Case.

SD Cards

I bought 128 GB SD cards for each of the Pis. I didn't need SD cards that hold that much data because I can attach external HDDs or SSDs to add storage. If I were to do this again, I think I would buy smaller SD cards and put the saved money towards external drives.

Network Switch

I bought an 8-port PoE switch from TP-Link mainly because it was the cheaper option between it and a Netgear 8-port PoE switch. The TP-Link switch is $30 cheaper than the Netgear equivalent. I had no problems at all - it works great with the Raspberry Pi PoE hats. There were no special configurations for the Raspberry Pi, no jumper settings for the PoE hat, and nothing to configure for the switch. Just connect all the things.

There will be a post coming soon that will list the steps I took to set up the Raspberry Pis and get K3s installed. It was relatively simple, but not completely hassle-free. Seeing all of the nodes running and available to the cluster for the first time made it worth it.
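For the curious, here is a small sketch of one way to check that every node reports as ready. It is not the setup from the upcoming post; it only assumes that `kubectl` is on the PATH and pointed at the cluster, and it reads the `Ready` condition from the output of `kubectl get nodes -o json`. The function names are my own.

```python
import json
import subprocess


def ready_nodes(nodes_json: str) -> dict[str, bool]:
    """Map each node name to True if its Ready condition has status "True"."""
    data = json.loads(nodes_json)
    result = {}
    for item in data.get("items", []):
        name = item["metadata"]["name"]
        conditions = item.get("status", {}).get("conditions", [])
        result[name] = any(
            c.get("type") == "Ready" and c.get("status") == "True"
            for c in conditions
        )
    return result


def fetch_nodes_json() -> str:
    """Run kubectl and return the raw JSON describing the cluster's nodes."""
    completed = subprocess.run(
        ["kubectl", "get", "nodes", "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return completed.stdout


def main() -> None:
    """Print one line per node showing whether it is ready."""
    for name, ready in sorted(ready_nodes(fetch_nodes_json()).items()):
        print(f"{name}: {'Ready' if ready else 'NotReady'}")
```

Running `main()` on a machine that can reach the cluster prints one line per node; `ready_nodes` can also be fed saved JSON if you just want to try the parsing.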